Store Item Demand Kaggle

Store item Demand Forecasting Challenge

%matplotlib inline

%reload_ext autoreload

%autoreload 2

Import fastai libraries to use columnar data in DNN

from fastai.structured import *

from fastai.column_data import *

np.set_printoptions(threshold=50, edgeitems=20)

PATH='/home/adrianrdzv/Documentos/fastai/fastai/data/forecasting/'

Feature Space:

- train: Los datos de entranamiento proporcionados por Kaggle

- submmission: ejemplo de salida

- test: los datos de salida que generaremos

Analyze data previous to data cleansing

table_names = ['train', 'test']

tables = [pd.read_csv(f'{PATH}{fname}.csv', low_memory=False) for fname in table_names]

from IPython.display import HTML

for t in tables: display(t.head())

| date | store | item | sales | |

|---|---|---|---|---|

| 0 | 2013-01-01 | 1 | 1 | 13 |

| 1 | 2013-01-02 | 1 | 1 | 11 |

| 2 | 2013-01-03 | 1 | 1 | 14 |

| 3 | 2013-01-04 | 1 | 1 | 13 |

| 4 | 2013-01-05 | 1 | 1 | 10 |

| id | date | store | item | |

|---|---|---|---|---|

| 0 | 0 | 2018-01-01 | 1 | 1 |

| 1 | 1 | 2018-01-02 | 1 | 1 |

| 2 | 2 | 2018-01-03 | 1 | 1 |

| 3 | 3 | 2018-01-04 | 1 | 1 |

| 4 | 4 | 2018-01-05 | 1 | 1 |

for t in tables: display(DataFrameSummary(t).summary())

| date | store | item | sales | |

|---|---|---|---|---|

| count | NaN | 913000 | 913000 | 913000 |

| mean | NaN | 5.5 | 25.5 | 52.2503 |

| std | NaN | 2.87228 | 14.4309 | 28.8011 |

| min | NaN | 1 | 1 | 0 |

| 25% | NaN | 3 | 13 | 30 |

| 50% | NaN | 5.5 | 25.5 | 47 |

| 75% | NaN | 8 | 38 | 70 |

| max | NaN | 10 | 50 | 231 |

| counts | 913000 | 913000 | 913000 | 913000 |

| uniques | 1826 | 10 | 50 | 213 |

| missing | 0 | 0 | 0 | 0 |

| missing_perc | 0% | 0% | 0% | 0% |

| types | categorical | numeric | numeric | numeric |

| id | date | store | item | |

|---|---|---|---|---|

| count | 45000 | NaN | 45000 | 45000 |

| mean | 22499.5 | NaN | 5.5 | 25.5 |

| std | 12990.5 | NaN | 2.87231 | 14.431 |

| min | 0 | NaN | 1 | 1 |

| 25% | 11249.8 | NaN | 3 | 13 |

| 50% | 22499.5 | NaN | 5.5 | 25.5 |

| 75% | 33749.2 | NaN | 8 | 38 |

| max | 44999 | NaN | 10 | 50 |

| counts | 45000 | 45000 | 45000 | 45000 |

| uniques | 45000 | 90 | 10 | 50 |

| missing | 0 | 0 | 0 | 0 |

| missing_perc | 0% | 0% | 0% | 0% |

| types | numeric | categorical | numeric | numeric |

Data Cleaning / Feature Engineering

Adjusting the data to be use in the DNN

train,test = tables

len(train),len(test)

(913000, 45000)

La siguiente función “add_datepart” nos proporciona una gran variedad de variables temporales que pueden capturar comportamientos de estacioanlidad y demas caracteristicas temporales en los datos

The next function “add_datepart” will give us a variety of temporary variables who captures seasonality behaviours and stuff related to time series

add_datepart(train, "date", drop=False)

add_datepart(test, "date", drop=False)

train.head()

| date | store | item | sales | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2013-01-01 | 1 | 1 | 13 | 2013 | 1 | 1 | 1 | 1 | 1 | False | True | False | True | False | True | 1356998400 |

| 1 | 2013-01-02 | 1 | 1 | 11 | 2013 | 1 | 1 | 2 | 2 | 2 | False | False | False | False | False | False | 1357084800 |

| 2 | 2013-01-03 | 1 | 1 | 14 | 2013 | 1 | 1 | 3 | 3 | 3 | False | False | False | False | False | False | 1357171200 |

| 3 | 2013-01-04 | 1 | 1 | 13 | 2013 | 1 | 1 | 4 | 4 | 4 | False | False | False | False | False | False | 1357257600 |

| 4 | 2013-01-05 | 1 | 1 | 10 | 2013 | 1 | 1 | 5 | 5 | 5 | False | False | False | False | False | False | 1357344000 |

test.head()

| id | date | store | item | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 2018-01-01 | 1 | 1 | 2018 | 1 | 1 | 1 | 0 | 1 | False | True | False | True | False | True | 1514764800 |

| 1 | 1 | 2018-01-02 | 1 | 1 | 2018 | 1 | 1 | 2 | 1 | 2 | False | False | False | False | False | False | 1514851200 |

| 2 | 2 | 2018-01-03 | 1 | 1 | 2018 | 1 | 1 | 3 | 2 | 3 | False | False | False | False | False | False | 1514937600 |

| 3 | 3 | 2018-01-04 | 1 | 1 | 2018 | 1 | 1 | 4 | 3 | 4 | False | False | False | False | False | False | 1515024000 |

| 4 | 4 | 2018-01-05 | 1 | 1 | 2018 | 1 | 1 | 5 | 4 | 5 | False | False | False | False | False | False | 1515110400 |

columns = ["date"]

df = train[columns]

df = df.set_index("date")

df.reset_index(inplace=True)

df.head()

| date | |

|---|---|

| 0 | 2013-01-01 |

| 1 | 2013-01-02 |

| 2 | 2013-01-03 |

| 3 | 2013-01-04 |

| 4 | 2013-01-05 |

test = test.set_index("date")

test.reset_index(inplace=True)

test.head()

| date | id | store | item | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2018-01-01 | 0 | 1 | 1 | 2018 | 1 | 1 | 1 | 0 | 1 | False | True | False | True | False | True | 1514764800 |

| 1 | 2018-01-02 | 1 | 1 | 1 | 2018 | 1 | 1 | 2 | 1 | 2 | False | False | False | False | False | False | 1514851200 |

| 2 | 2018-01-03 | 2 | 1 | 1 | 2018 | 1 | 1 | 3 | 2 | 3 | False | False | False | False | False | False | 1514937600 |

| 3 | 2018-01-04 | 3 | 1 | 1 | 2018 | 1 | 1 | 4 | 3 | 4 | False | False | False | False | False | False | 1515024000 |

| 4 | 2018-01-05 | 4 | 1 | 1 | 2018 | 1 | 1 | 5 | 4 | 5 | False | False | False | False | False | False | 1515110400 |

Para poder hacer uso de las funciones de la red neuronal debemos identificar que variables trataremos como categoricas y cuales como continuas, para este caso todas las trataremos como categoricas

#cat_vars = ['store', 'item', 'Dayofweek', 'Year', 'Month', 'Day']

cat_vars = ['store','item', 'Year', 'Month', 'Week', 'Day','Dayofweek', 'Dayofyear', 'Is_month_end', 'Is_month_start','Is_quarter_end', 'Is_quarter_start', 'Is_year_end', 'Is_year_start']

contin_vars = []

n = len(test); n

45000

dep = 'sales'

test[dep]=0

join_test=test.copy() # Pendiente eliminar

Debemos modificar explicitamente el dataframe para que cada variable categorica se exprese como tal, dado que asi espera las funciones

We need to modify explictly the dataframe in order to every categorical variable be tagged as categorical, and the same for continuos

for v in cat_vars: test[v] = test[v].astype('category').cat.as_ordered()

for v in cat_vars: train[v] = train[v].astype('category').cat.as_ordered()

for v in contin_vars:

train[v] = train[v].astype('float32')

test[v] = test[v].astype('float32')

We are going to use the full dataset to train our model

samp_size = len(train)

joined_samp = train.set_index("date")

#joined_samp = joined_samp.set_index("date")

joined_test = test.set_index("date")

We can now process our data…

joined_samp.head(10)

| store | item | sales | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| date | ||||||||||||||||

| 2013-01-01 | 1 | 1 | 13 | 2013 | 1 | 1 | 1 | 1 | 1 | False | True | False | True | False | True | 1356998400 |

| 2013-01-02 | 1 | 1 | 11 | 2013 | 1 | 1 | 2 | 2 | 2 | False | False | False | False | False | False | 1357084800 |

| 2013-01-03 | 1 | 1 | 14 | 2013 | 1 | 1 | 3 | 3 | 3 | False | False | False | False | False | False | 1357171200 |

| 2013-01-04 | 1 | 1 | 13 | 2013 | 1 | 1 | 4 | 4 | 4 | False | False | False | False | False | False | 1357257600 |

| 2013-01-05 | 1 | 1 | 10 | 2013 | 1 | 1 | 5 | 5 | 5 | False | False | False | False | False | False | 1357344000 |

| 2013-01-06 | 1 | 1 | 12 | 2013 | 1 | 1 | 6 | 6 | 6 | False | False | False | False | False | False | 1357430400 |

| 2013-01-07 | 1 | 1 | 10 | 2013 | 1 | 2 | 7 | 0 | 7 | False | False | False | False | False | False | 1357516800 |

| 2013-01-08 | 1 | 1 | 9 | 2013 | 1 | 2 | 8 | 1 | 8 | False | False | False | False | False | False | 1357603200 |

| 2013-01-09 | 1 | 1 | 12 | 2013 | 1 | 2 | 9 | 2 | 9 | False | False | False | False | False | False | 1357689600 |

| 2013-01-10 | 1 | 1 | 9 | 2013 | 1 | 2 | 10 | 3 | 10 | False | False | False | False | False | False | 1357776000 |

joined_test

| id | store | item | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | sales | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| date | |||||||||||||||||

| 2018-01-01 | 0 | 1 | 1 | 2018 | 1 | 1 | 1 | 0 | 1 | False | True | False | True | False | True | 1514764800 | 0 |

| 2018-01-02 | 1 | 1 | 1 | 2018 | 1 | 1 | 2 | 1 | 2 | False | False | False | False | False | False | 1514851200 | 0 |

| 2018-01-03 | 2 | 1 | 1 | 2018 | 1 | 1 | 3 | 2 | 3 | False | False | False | False | False | False | 1514937600 | 0 |

| 2018-01-04 | 3 | 1 | 1 | 2018 | 1 | 1 | 4 | 3 | 4 | False | False | False | False | False | False | 1515024000 | 0 |

| 2018-01-05 | 4 | 1 | 1 | 2018 | 1 | 1 | 5 | 4 | 5 | False | False | False | False | False | False | 1515110400 | 0 |

| 2018-01-06 | 5 | 1 | 1 | 2018 | 1 | 1 | 6 | 5 | 6 | False | False | False | False | False | False | 1515196800 | 0 |

| 2018-01-07 | 6 | 1 | 1 | 2018 | 1 | 1 | 7 | 6 | 7 | False | False | False | False | False | False | 1515283200 | 0 |

| 2018-01-08 | 7 | 1 | 1 | 2018 | 1 | 2 | 8 | 0 | 8 | False | False | False | False | False | False | 1515369600 | 0 |

| 2018-01-09 | 8 | 1 | 1 | 2018 | 1 | 2 | 9 | 1 | 9 | False | False | False | False | False | False | 1515456000 | 0 |

| 2018-01-10 | 9 | 1 | 1 | 2018 | 1 | 2 | 10 | 2 | 10 | False | False | False | False | False | False | 1515542400 | 0 |

| 2018-01-11 | 10 | 1 | 1 | 2018 | 1 | 2 | 11 | 3 | 11 | False | False | False | False | False | False | 1515628800 | 0 |

| 2018-01-12 | 11 | 1 | 1 | 2018 | 1 | 2 | 12 | 4 | 12 | False | False | False | False | False | False | 1515715200 | 0 |

| 2018-01-13 | 12 | 1 | 1 | 2018 | 1 | 2 | 13 | 5 | 13 | False | False | False | False | False | False | 1515801600 | 0 |

| 2018-01-14 | 13 | 1 | 1 | 2018 | 1 | 2 | 14 | 6 | 14 | False | False | False | False | False | False | 1515888000 | 0 |

| 2018-01-15 | 14 | 1 | 1 | 2018 | 1 | 3 | 15 | 0 | 15 | False | False | False | False | False | False | 1515974400 | 0 |

| 2018-01-16 | 15 | 1 | 1 | 2018 | 1 | 3 | 16 | 1 | 16 | False | False | False | False | False | False | 1516060800 | 0 |

| 2018-01-17 | 16 | 1 | 1 | 2018 | 1 | 3 | 17 | 2 | 17 | False | False | False | False | False | False | 1516147200 | 0 |

| 2018-01-18 | 17 | 1 | 1 | 2018 | 1 | 3 | 18 | 3 | 18 | False | False | False | False | False | False | 1516233600 | 0 |

| 2018-01-19 | 18 | 1 | 1 | 2018 | 1 | 3 | 19 | 4 | 19 | False | False | False | False | False | False | 1516320000 | 0 |

| 2018-01-20 | 19 | 1 | 1 | 2018 | 1 | 3 | 20 | 5 | 20 | False | False | False | False | False | False | 1516406400 | 0 |

| 2018-01-21 | 20 | 1 | 1 | 2018 | 1 | 3 | 21 | 6 | 21 | False | False | False | False | False | False | 1516492800 | 0 |

| 2018-01-22 | 21 | 1 | 1 | 2018 | 1 | 4 | 22 | 0 | 22 | False | False | False | False | False | False | 1516579200 | 0 |

| 2018-01-23 | 22 | 1 | 1 | 2018 | 1 | 4 | 23 | 1 | 23 | False | False | False | False | False | False | 1516665600 | 0 |

| 2018-01-24 | 23 | 1 | 1 | 2018 | 1 | 4 | 24 | 2 | 24 | False | False | False | False | False | False | 1516752000 | 0 |

| 2018-01-25 | 24 | 1 | 1 | 2018 | 1 | 4 | 25 | 3 | 25 | False | False | False | False | False | False | 1516838400 | 0 |

| 2018-01-26 | 25 | 1 | 1 | 2018 | 1 | 4 | 26 | 4 | 26 | False | False | False | False | False | False | 1516924800 | 0 |

| 2018-01-27 | 26 | 1 | 1 | 2018 | 1 | 4 | 27 | 5 | 27 | False | False | False | False | False | False | 1517011200 | 0 |

| 2018-01-28 | 27 | 1 | 1 | 2018 | 1 | 4 | 28 | 6 | 28 | False | False | False | False | False | False | 1517097600 | 0 |

| 2018-01-29 | 28 | 1 | 1 | 2018 | 1 | 5 | 29 | 0 | 29 | False | False | False | False | False | False | 1517184000 | 0 |

| 2018-01-30 | 29 | 1 | 1 | 2018 | 1 | 5 | 30 | 1 | 30 | False | False | False | False | False | False | 1517270400 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2018-03-02 | 44970 | 10 | 50 | 2018 | 3 | 9 | 2 | 4 | 61 | False | False | False | False | False | False | 1519948800 | 0 |

| 2018-03-03 | 44971 | 10 | 50 | 2018 | 3 | 9 | 3 | 5 | 62 | False | False | False | False | False | False | 1520035200 | 0 |

| 2018-03-04 | 44972 | 10 | 50 | 2018 | 3 | 9 | 4 | 6 | 63 | False | False | False | False | False | False | 1520121600 | 0 |

| 2018-03-05 | 44973 | 10 | 50 | 2018 | 3 | 10 | 5 | 0 | 64 | False | False | False | False | False | False | 1520208000 | 0 |

| 2018-03-06 | 44974 | 10 | 50 | 2018 | 3 | 10 | 6 | 1 | 65 | False | False | False | False | False | False | 1520294400 | 0 |

| 2018-03-07 | 44975 | 10 | 50 | 2018 | 3 | 10 | 7 | 2 | 66 | False | False | False | False | False | False | 1520380800 | 0 |

| 2018-03-08 | 44976 | 10 | 50 | 2018 | 3 | 10 | 8 | 3 | 67 | False | False | False | False | False | False | 1520467200 | 0 |

| 2018-03-09 | 44977 | 10 | 50 | 2018 | 3 | 10 | 9 | 4 | 68 | False | False | False | False | False | False | 1520553600 | 0 |

| 2018-03-10 | 44978 | 10 | 50 | 2018 | 3 | 10 | 10 | 5 | 69 | False | False | False | False | False | False | 1520640000 | 0 |

| 2018-03-11 | 44979 | 10 | 50 | 2018 | 3 | 10 | 11 | 6 | 70 | False | False | False | False | False | False | 1520726400 | 0 |

| 2018-03-12 | 44980 | 10 | 50 | 2018 | 3 | 11 | 12 | 0 | 71 | False | False | False | False | False | False | 1520812800 | 0 |

| 2018-03-13 | 44981 | 10 | 50 | 2018 | 3 | 11 | 13 | 1 | 72 | False | False | False | False | False | False | 1520899200 | 0 |

| 2018-03-14 | 44982 | 10 | 50 | 2018 | 3 | 11 | 14 | 2 | 73 | False | False | False | False | False | False | 1520985600 | 0 |

| 2018-03-15 | 44983 | 10 | 50 | 2018 | 3 | 11 | 15 | 3 | 74 | False | False | False | False | False | False | 1521072000 | 0 |

| 2018-03-16 | 44984 | 10 | 50 | 2018 | 3 | 11 | 16 | 4 | 75 | False | False | False | False | False | False | 1521158400 | 0 |

| 2018-03-17 | 44985 | 10 | 50 | 2018 | 3 | 11 | 17 | 5 | 76 | False | False | False | False | False | False | 1521244800 | 0 |

| 2018-03-18 | 44986 | 10 | 50 | 2018 | 3 | 11 | 18 | 6 | 77 | False | False | False | False | False | False | 1521331200 | 0 |

| 2018-03-19 | 44987 | 10 | 50 | 2018 | 3 | 12 | 19 | 0 | 78 | False | False | False | False | False | False | 1521417600 | 0 |

| 2018-03-20 | 44988 | 10 | 50 | 2018 | 3 | 12 | 20 | 1 | 79 | False | False | False | False | False | False | 1521504000 | 0 |

| 2018-03-21 | 44989 | 10 | 50 | 2018 | 3 | 12 | 21 | 2 | 80 | False | False | False | False | False | False | 1521590400 | 0 |

| 2018-03-22 | 44990 | 10 | 50 | 2018 | 3 | 12 | 22 | 3 | 81 | False | False | False | False | False | False | 1521676800 | 0 |

| 2018-03-23 | 44991 | 10 | 50 | 2018 | 3 | 12 | 23 | 4 | 82 | False | False | False | False | False | False | 1521763200 | 0 |

| 2018-03-24 | 44992 | 10 | 50 | 2018 | 3 | 12 | 24 | 5 | 83 | False | False | False | False | False | False | 1521849600 | 0 |

| 2018-03-25 | 44993 | 10 | 50 | 2018 | 3 | 12 | 25 | 6 | 84 | False | False | False | False | False | False | 1521936000 | 0 |

| 2018-03-26 | 44994 | 10 | 50 | 2018 | 3 | 13 | 26 | 0 | 85 | False | False | False | False | False | False | 1522022400 | 0 |

| 2018-03-27 | 44995 | 10 | 50 | 2018 | 3 | 13 | 27 | 1 | 86 | False | False | False | False | False | False | 1522108800 | 0 |

| 2018-03-28 | 44996 | 10 | 50 | 2018 | 3 | 13 | 28 | 2 | 87 | False | False | False | False | False | False | 1522195200 | 0 |

| 2018-03-29 | 44997 | 10 | 50 | 2018 | 3 | 13 | 29 | 3 | 88 | False | False | False | False | False | False | 1522281600 | 0 |

| 2018-03-30 | 44998 | 10 | 50 | 2018 | 3 | 13 | 30 | 4 | 89 | False | False | False | False | False | False | 1522368000 | 0 |

| 2018-03-31 | 44999 | 10 | 50 | 2018 | 3 | 13 | 31 | 5 | 90 | True | False | True | False | False | False | 1522454400 | 0 |

45000 rows × 17 columns

The next function process the dataframe, to convert the data to the final representation to be used in the Neural network, and separates the response variable from the predictors.

df, y, nas, mapper = proc_df(joined_samp, 'sales', do_scale=True)

yl = np.log(y)

/home/adrianrdzv/anaconda3/envs/fastai/lib/python3.6/site-packages/ipykernel_launcher.py:2: RuntimeWarning: divide by zero encountered in log

#df_test, _, nas, mapper = proc_df(test, 'sales', do_scale=True, skip_flds=['id'], mapper=mapper, na_dict=nas)

df_test, _, nas, mapper = proc_df(joined_test, 'sales', do_scale=True, skip_flds=['id'],mapper=mapper, na_dict=nas)

As we can see in the next chunks of code the data is now all continuos

df.head()

| store | item | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| date | |||||||||||||||

| 2013-01-01 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 | 2 | 1 | 2 | 1 | 2 | -1.731103 |

| 2013-01-02 | 1 | 1 | 1 | 1 | 1 | 2 | 3 | 2 | 1 | 1 | 1 | 1 | 1 | 1 | -1.729205 |

| 2013-01-03 | 1 | 1 | 1 | 1 | 1 | 3 | 4 | 3 | 1 | 1 | 1 | 1 | 1 | 1 | -1.727308 |

| 2013-01-04 | 1 | 1 | 1 | 1 | 1 | 4 | 5 | 4 | 1 | 1 | 1 | 1 | 1 | 1 | -1.725411 |

| 2013-01-05 | 1 | 1 | 1 | 1 | 1 | 5 | 6 | 5 | 1 | 1 | 1 | 1 | 1 | 1 | -1.723514 |

df_test.head()

| store | item | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| date | |||||||||||||||

| 2018-01-01 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 2 | 1 | 2 | 1.733000 |

| 2018-01-02 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1.734897 |

| 2018-01-03 | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1.736794 |

| 2018-01-04 | 1 | 1 | 1 | 1 | 1 | 4 | 4 | 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1.738691 |

| 2018-01-05 | 1 | 1 | 1 | 1 | 1 | 5 | 5 | 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1.740588 |

The validation set to be used, will be accord to the expected test set, so we will use the last three months as a validation set

val_idx = np.flatnonzero(

(df.index<=datetime.datetime(2017,12,31)) & (df.index>=datetime.datetime(2017,10,1)))

len(df.iloc[val_idx])

46000

val_idx

array([ 1734, 1735, 1736, 1737, 1738, 1739, 1740, 1741, 1742, 1743, 1744, 1745,

1746, 1747, 1748, 1749, 1750, 1751, 1752, 1753, ..., 912980, 912981, 912982, 912983,

912984, 912985, 912986, 912987, 912988, 912989, 912990, 912991, 912992, 912993, 912994, 912995,

912996, 912997, 912998, 912999])

#prueba de datos de validacion en rango correcto

df.iloc[912984]

store 10.000000

item 50.000000

Year 5.000000

Month 12.000000

Week 50.000000

Day 16.000000

Dayofweek 6.000000

Dayofyear 350.000000

Is_month_end 1.000000

Is_month_start 1.000000

Is_quarter_end 1.000000

Is_quarter_start 1.000000

Is_year_end 1.000000

Is_year_start 1.000000

Elapsed 1.702646

Name: 2017-12-16 00:00:00, dtype: float64

DL

We are going to create a new metric SMAPE https://en.wikipedia.org/wiki/Symmetric_mean_absolute_percentage_error

#Eliminar la metrica de rossman (NA's)

def inv_y(a): return np.exp(a)

def exp_rmspe(y_pred, targ):

targ = inv_y(targ)

pct_var = (targ - inv_y(y_pred))/targ

return math.sqrt((pct_var**2).mean())

max_log_y = np.max(yl)

y_range = (0, max_log_y*1.2)

max_y = np.max(y)

y_range=(0,max_y*1.2)

##F_t = y_pred A_t = targ

def SMAPE(y_pred,targ):

return (np.abs(y_pred-targ)/((np.fabs(y_pred)+np.fabs(targ))/2)).mean()

#return (math.fabs(y_pred-targ)/((math.fabs(y_pred)+math.fabs(targ))/2))

We can create a ModelData object directly from out data frame.

#md = ColumnarModelData.from_data_frame(PATH, val_idx, df, yl.astype(np.float32), cat_flds=cat_vars, bs=128,

# test_df=df_test)

#md = ColumnarModelData.from_data_frame(PATH, val_idx, df, yl.astype(np.float32), cat_flds=cat_vars, bs=128)

#md = ColumnarModelData.from_data_frame(PATH, val_idx, df, y.astype(np.float32), cat_flds=cat_vars, bs=128)

#md = ColumnarModelData.from_data_frame(PATH, val_idx, df, y.astype(np.float32), cat_flds=cat_vars, bs=128,test_df=df_test)

md = ColumnarModelData.from_data_frame(PATH, val_idx, df, y.astype(np.float32), cat_flds=cat_vars, bs=256,test_df=df_test)

cat_vars

['store',

'item',

'Year',

'Month',

'Week',

'Day',

'Dayofweek',

'Dayofyear',

'Is_month_end',

'Is_month_start',

'Is_quarter_end',

'Is_quarter_start',

'Is_year_end',

'Is_year_start']

Some categorical variables have a lot more levels than others. Store, in particular, has over a thousand!

cat_sz = [(c, len(joined_samp[c].cat.categories)+1) for c in cat_vars]

cat_sz

[('store', 11),

('item', 51),

('Year', 6),

('Month', 13),

('Week', 54),

('Day', 32),

('Dayofweek', 8),

('Dayofyear', 367),

('Is_month_end', 3),

('Is_month_start', 3),

('Is_quarter_end', 3),

('Is_quarter_start', 3),

('Is_year_end', 3),

('Is_year_start', 3)]

We use the cardinality of each variable (that is, its number of unique values) to decide how large to make its embeddings. Each level will be associated with a vector with length defined as below.

emb_szs = [(c, min(50, (c+1)//2)) for _,c in cat_sz]

emb_szs

[(11, 6),

(51, 26),

(6, 3),

(13, 7),

(54, 27),

(32, 16),

(8, 4),

(367, 50),

(3, 2),

(3, 2),

(3, 2),

(3, 2),

(3, 2),

(3, 2)]

y

array([13, 11, 14, 13, 10, 12, 10, 9, 12, 9, 9, 7, 10, 12, 5, 7, 16, 7, 18, 15, ..., 67, 67, 72, 72,

52, 86, 53, 54, 51, 63, 75, 70, 76, 51, 41, 63, 59, 74, 62, 82])

m = md.get_learner(emb_szs, len(df.columns)-len(cat_vars),

0.04, 1, [2000,800], [0.001,0.01], y_range=y_range)

lr = 1e-3

/home/adrianrdzv/Documentos/fastai/fastai/courses/dl1/fastai/column_data.py:101: UserWarning: nn.init.kaiming_normal is now deprecated in favor of nn.init.kaiming_normal_.

for o in self.lins: kaiming_normal(o.weight.data)

/home/adrianrdzv/Documentos/fastai/fastai/courses/dl1/fastai/column_data.py:103: UserWarning: nn.init.kaiming_normal is now deprecated in favor of nn.init.kaiming_normal_.

kaiming_normal(self.outp.weight.data)

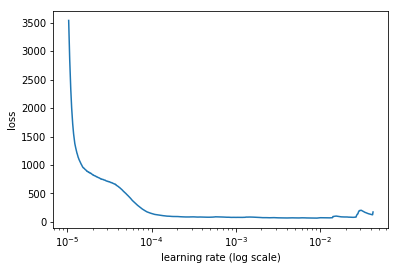

m.lr_find()

HBox(children=(IntProgress(value=0, description='Epoch', max=1), HTML(value='')))

61%|██████ | 2053/3387 [02:50<01:50, 12.08it/s, loss=435]

m.sched.plot()

With the fit function and passing the metric SMAPE we could see how our model is working in the validation set, and seeing this we could then generate our prediction for the test range

m.fit(lr, 3, metrics=[SMAPE])

HBox(children=(IntProgress(value=0, description='Epoch', max=3), HTML(value='')))

1%| | 31/3387 [00:02<04:33, 12.28it/s, loss=3.51e+03]

epoch trn_loss val_loss SMAPE

0 58.461612 62.077307 0.12835

1 57.60579 61.226561 0.128534

2 55.84363 60.653265 0.127744

[60.65326502791695, 0.12774361452849015]

#Calar mañana con este en kaggle

#m.fit(lr, 2, metrics=[exp_rmspe,SMAPE], cycle_len=3)

HBox(children=(IntProgress(value=0, description='Epoch', max=6), HTML(value='')))

epoch trn_loss val_loss exp_rmspe SMAPE

0 58.428253 60.687587 nan 0.125747

1 55.664219 58.901838 nan 0.123808

2 53.808453 56.613611 nan 0.122914

3 56.226725 56.70169 nan 0.122738

4 53.166681 56.525577 nan 0.122837

5 53.49262 56.550598 nan 0.122943

[56.550598181683085, nan, 0.1229434128092683]

#m.load_cycle()

#m.load

We could calculate the metric again to validate our model before being use to predict in the test dataset

x,y=m.predict_with_targs()

SMAPE(x,y)

0.12774362

Using the predict function with True parameter we predict in the test dataset, so we can save our results

pred_test=m.predict(True)

len(pred_test)

pred_test

array([[12.4353 ],

[15.99211],

[15.5996 ],

[16.17989],

[17.26732],

[18.59948],

[19.75835],

[13.07455],

[15.55011],

[15.71422],

[16.48752],

[17.36207],

[18.38673],

[19.4954 ],

[13.12858],

[16.1863 ],

[15.82948],

[16.28306],

[17.44085],

[18.91442],

...,

[64.77862],

[75.88193],

[76.84612],

[80.93729],

[85.14046],

[90.38946],

[96.48158],

[65.06002],

[76.08002],

[76.95865],

[80.9269 ],

[84.26495],

[89.71136],

[97.39009],

[64.57227],

[76.35717],

[75.9097 ],

[82.60927],

[86.11958],

[91.05175]], dtype=float32)

joined_test['sales'] = pred_test

joined_test

| id | store | item | Year | Month | Week | Day | Dayofweek | Dayofyear | Is_month_end | Is_month_start | Is_quarter_end | Is_quarter_start | Is_year_end | Is_year_start | Elapsed | sales | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| date | |||||||||||||||||

| 2018-01-01 | 0 | 1 | 1 | 2018 | 1 | 1 | 1 | 0 | 1 | False | True | False | True | False | True | 1514764800 | 12.435302 |

| 2018-01-02 | 1 | 1 | 1 | 2018 | 1 | 1 | 2 | 1 | 2 | False | False | False | False | False | False | 1514851200 | 15.992112 |

| 2018-01-03 | 2 | 1 | 1 | 2018 | 1 | 1 | 3 | 2 | 3 | False | False | False | False | False | False | 1514937600 | 15.599597 |

| 2018-01-04 | 3 | 1 | 1 | 2018 | 1 | 1 | 4 | 3 | 4 | False | False | False | False | False | False | 1515024000 | 16.179892 |

| 2018-01-05 | 4 | 1 | 1 | 2018 | 1 | 1 | 5 | 4 | 5 | False | False | False | False | False | False | 1515110400 | 17.267321 |

| 2018-01-06 | 5 | 1 | 1 | 2018 | 1 | 1 | 6 | 5 | 6 | False | False | False | False | False | False | 1515196800 | 18.599476 |

| 2018-01-07 | 6 | 1 | 1 | 2018 | 1 | 1 | 7 | 6 | 7 | False | False | False | False | False | False | 1515283200 | 19.758350 |

| 2018-01-08 | 7 | 1 | 1 | 2018 | 1 | 2 | 8 | 0 | 8 | False | False | False | False | False | False | 1515369600 | 13.074553 |

| 2018-01-09 | 8 | 1 | 1 | 2018 | 1 | 2 | 9 | 1 | 9 | False | False | False | False | False | False | 1515456000 | 15.550114 |

| 2018-01-10 | 9 | 1 | 1 | 2018 | 1 | 2 | 10 | 2 | 10 | False | False | False | False | False | False | 1515542400 | 15.714221 |

| 2018-01-11 | 10 | 1 | 1 | 2018 | 1 | 2 | 11 | 3 | 11 | False | False | False | False | False | False | 1515628800 | 16.487524 |

| 2018-01-12 | 11 | 1 | 1 | 2018 | 1 | 2 | 12 | 4 | 12 | False | False | False | False | False | False | 1515715200 | 17.362072 |

| 2018-01-13 | 12 | 1 | 1 | 2018 | 1 | 2 | 13 | 5 | 13 | False | False | False | False | False | False | 1515801600 | 18.386734 |

| 2018-01-14 | 13 | 1 | 1 | 2018 | 1 | 2 | 14 | 6 | 14 | False | False | False | False | False | False | 1515888000 | 19.495398 |

| 2018-01-15 | 14 | 1 | 1 | 2018 | 1 | 3 | 15 | 0 | 15 | False | False | False | False | False | False | 1515974400 | 13.128580 |

| 2018-01-16 | 15 | 1 | 1 | 2018 | 1 | 3 | 16 | 1 | 16 | False | False | False | False | False | False | 1516060800 | 16.186298 |

| 2018-01-17 | 16 | 1 | 1 | 2018 | 1 | 3 | 17 | 2 | 17 | False | False | False | False | False | False | 1516147200 | 15.829476 |

| 2018-01-18 | 17 | 1 | 1 | 2018 | 1 | 3 | 18 | 3 | 18 | False | False | False | False | False | False | 1516233600 | 16.283060 |

| 2018-01-19 | 18 | 1 | 1 | 2018 | 1 | 3 | 19 | 4 | 19 | False | False | False | False | False | False | 1516320000 | 17.440847 |

| 2018-01-20 | 19 | 1 | 1 | 2018 | 1 | 3 | 20 | 5 | 20 | False | False | False | False | False | False | 1516406400 | 18.914425 |

| 2018-01-21 | 20 | 1 | 1 | 2018 | 1 | 3 | 21 | 6 | 21 | False | False | False | False | False | False | 1516492800 | 19.885489 |

| 2018-01-22 | 21 | 1 | 1 | 2018 | 1 | 4 | 22 | 0 | 22 | False | False | False | False | False | False | 1516579200 | 13.303565 |

| 2018-01-23 | 22 | 1 | 1 | 2018 | 1 | 4 | 23 | 1 | 23 | False | False | False | False | False | False | 1516665600 | 16.030056 |

| 2018-01-24 | 23 | 1 | 1 | 2018 | 1 | 4 | 24 | 2 | 24 | False | False | False | False | False | False | 1516752000 | 16.096254 |

| 2018-01-25 | 24 | 1 | 1 | 2018 | 1 | 4 | 25 | 3 | 25 | False | False | False | False | False | False | 1516838400 | 16.395996 |

| 2018-01-26 | 25 | 1 | 1 | 2018 | 1 | 4 | 26 | 4 | 26 | False | False | False | False | False | False | 1516924800 | 17.516056 |

| 2018-01-27 | 26 | 1 | 1 | 2018 | 1 | 4 | 27 | 5 | 27 | False | False | False | False | False | False | 1517011200 | 18.376472 |

| 2018-01-28 | 27 | 1 | 1 | 2018 | 1 | 4 | 28 | 6 | 28 | False | False | False | False | False | False | 1517097600 | 19.416647 |

| 2018-01-29 | 28 | 1 | 1 | 2018 | 1 | 5 | 29 | 0 | 29 | False | False | False | False | False | False | 1517184000 | 13.390797 |

| 2018-01-30 | 29 | 1 | 1 | 2018 | 1 | 5 | 30 | 1 | 30 | False | False | False | False | False | False | 1517270400 | 15.469314 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2018-03-02 | 44970 | 10 | 50 | 2018 | 3 | 9 | 2 | 4 | 61 | False | False | False | False | False | False | 1519948800 | 84.911552 |

| 2018-03-03 | 44971 | 10 | 50 | 2018 | 3 | 9 | 3 | 5 | 62 | False | False | False | False | False | False | 1520035200 | 90.113197 |

| 2018-03-04 | 44972 | 10 | 50 | 2018 | 3 | 9 | 4 | 6 | 63 | False | False | False | False | False | False | 1520121600 | 94.746307 |

| 2018-03-05 | 44973 | 10 | 50 | 2018 | 3 | 10 | 5 | 0 | 64 | False | False | False | False | False | False | 1520208000 | 65.446701 |

| 2018-03-06 | 44974 | 10 | 50 | 2018 | 3 | 10 | 6 | 1 | 65 | False | False | False | False | False | False | 1520294400 | 76.037209 |

| 2018-03-07 | 44975 | 10 | 50 | 2018 | 3 | 10 | 7 | 2 | 66 | False | False | False | False | False | False | 1520380800 | 75.994850 |

| 2018-03-08 | 44976 | 10 | 50 | 2018 | 3 | 10 | 8 | 3 | 67 | False | False | False | False | False | False | 1520467200 | 80.185951 |

| 2018-03-09 | 44977 | 10 | 50 | 2018 | 3 | 10 | 9 | 4 | 68 | False | False | False | False | False | False | 1520553600 | 84.384804 |

| 2018-03-10 | 44978 | 10 | 50 | 2018 | 3 | 10 | 10 | 5 | 69 | False | False | False | False | False | False | 1520640000 | 91.049042 |

| 2018-03-11 | 44979 | 10 | 50 | 2018 | 3 | 10 | 11 | 6 | 70 | False | False | False | False | False | False | 1520726400 | 96.319534 |

| 2018-03-12 | 44980 | 10 | 50 | 2018 | 3 | 11 | 12 | 0 | 71 | False | False | False | False | False | False | 1520812800 | 64.778618 |

| 2018-03-13 | 44981 | 10 | 50 | 2018 | 3 | 11 | 13 | 1 | 72 | False | False | False | False | False | False | 1520899200 | 75.881927 |

| 2018-03-14 | 44982 | 10 | 50 | 2018 | 3 | 11 | 14 | 2 | 73 | False | False | False | False | False | False | 1520985600 | 76.846123 |

| 2018-03-15 | 44983 | 10 | 50 | 2018 | 3 | 11 | 15 | 3 | 74 | False | False | False | False | False | False | 1521072000 | 80.937286 |

| 2018-03-16 | 44984 | 10 | 50 | 2018 | 3 | 11 | 16 | 4 | 75 | False | False | False | False | False | False | 1521158400 | 85.140465 |

| 2018-03-17 | 44985 | 10 | 50 | 2018 | 3 | 11 | 17 | 5 | 76 | False | False | False | False | False | False | 1521244800 | 90.389458 |

| 2018-03-18 | 44986 | 10 | 50 | 2018 | 3 | 11 | 18 | 6 | 77 | False | False | False | False | False | False | 1521331200 | 96.481575 |

| 2018-03-19 | 44987 | 10 | 50 | 2018 | 3 | 12 | 19 | 0 | 78 | False | False | False | False | False | False | 1521417600 | 65.060020 |

| 2018-03-20 | 44988 | 10 | 50 | 2018 | 3 | 12 | 20 | 1 | 79 | False | False | False | False | False | False | 1521504000 | 76.080025 |

| 2018-03-21 | 44989 | 10 | 50 | 2018 | 3 | 12 | 21 | 2 | 80 | False | False | False | False | False | False | 1521590400 | 76.958649 |

| 2018-03-22 | 44990 | 10 | 50 | 2018 | 3 | 12 | 22 | 3 | 81 | False | False | False | False | False | False | 1521676800 | 80.926903 |

| 2018-03-23 | 44991 | 10 | 50 | 2018 | 3 | 12 | 23 | 4 | 82 | False | False | False | False | False | False | 1521763200 | 84.264954 |

| 2018-03-24 | 44992 | 10 | 50 | 2018 | 3 | 12 | 24 | 5 | 83 | False | False | False | False | False | False | 1521849600 | 89.711365 |

| 2018-03-25 | 44993 | 10 | 50 | 2018 | 3 | 12 | 25 | 6 | 84 | False | False | False | False | False | False | 1521936000 | 97.390091 |

| 2018-03-26 | 44994 | 10 | 50 | 2018 | 3 | 13 | 26 | 0 | 85 | False | False | False | False | False | False | 1522022400 | 64.572266 |

| 2018-03-27 | 44995 | 10 | 50 | 2018 | 3 | 13 | 27 | 1 | 86 | False | False | False | False | False | False | 1522108800 | 76.357170 |

| 2018-03-28 | 44996 | 10 | 50 | 2018 | 3 | 13 | 28 | 2 | 87 | False | False | False | False | False | False | 1522195200 | 75.909698 |

| 2018-03-29 | 44997 | 10 | 50 | 2018 | 3 | 13 | 29 | 3 | 88 | False | False | False | False | False | False | 1522281600 | 82.609268 |

| 2018-03-30 | 44998 | 10 | 50 | 2018 | 3 | 13 | 30 | 4 | 89 | False | False | False | False | False | False | 1522368000 | 86.119576 |

| 2018-03-31 | 44999 | 10 | 50 | 2018 | 3 | 13 | 31 | 5 | 90 | True | False | True | False | False | False | 1522454400 | 91.051750 |

45000 rows × 17 columns

We can save our results in the format specified, with the next chunk of code

csv_fn=f'{PATH}tmp/submission_4agosto.csv'

joined_test[['id','sales']].to_csv(csv_fn, index=False)